Related posts

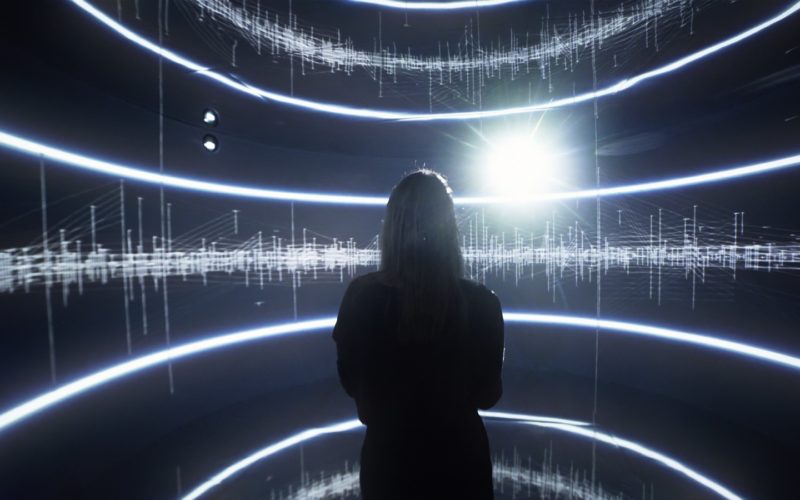

This interactive Fatima Yamaha show projects emotions into light

May 05, 2017

Star Wars Projection Mapping Mural

Jan 18, 2017

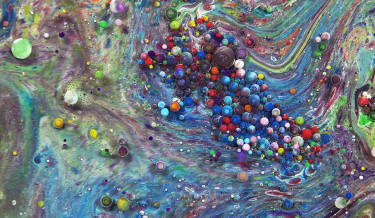

Paint, Oil, Milk, and Honey Mix in this Surreal Macro Video of Swirling Liquids by Thomas Blanchard

Aug 13, 2015
