Related post

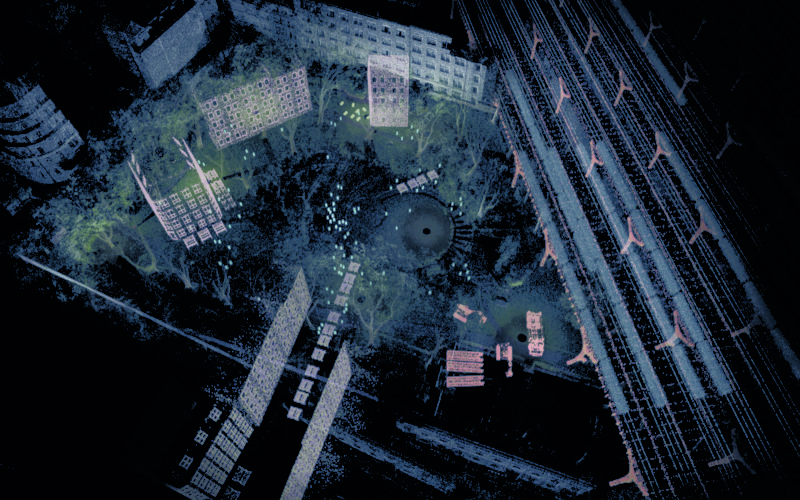

Fall into this Elevator Shaft Audiovisual Installation

Jan 13, 2016



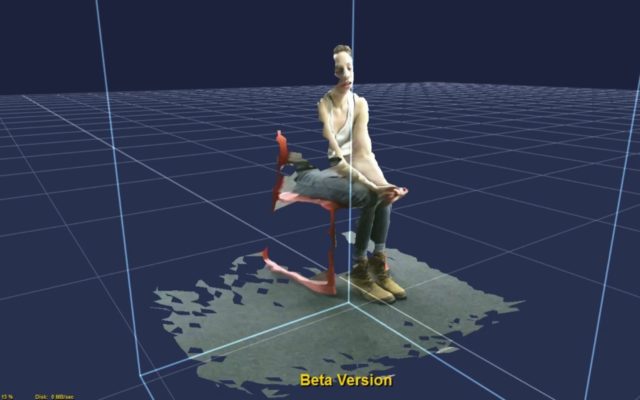

AR and VR: Two sides of the same coin?

Sep 19, 2018

