Related post

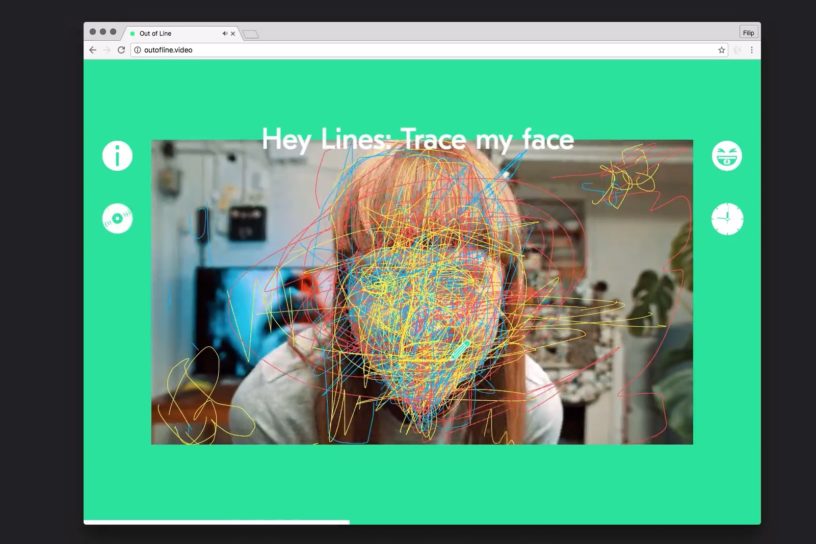

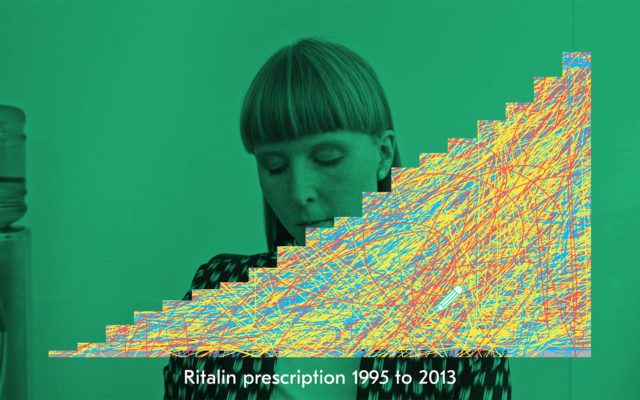

Facehacking: how it was in 2014

Jan 12, 2015

260-Laser Light Art Installation Hits Houston

Mar 08, 2017
