Related posts

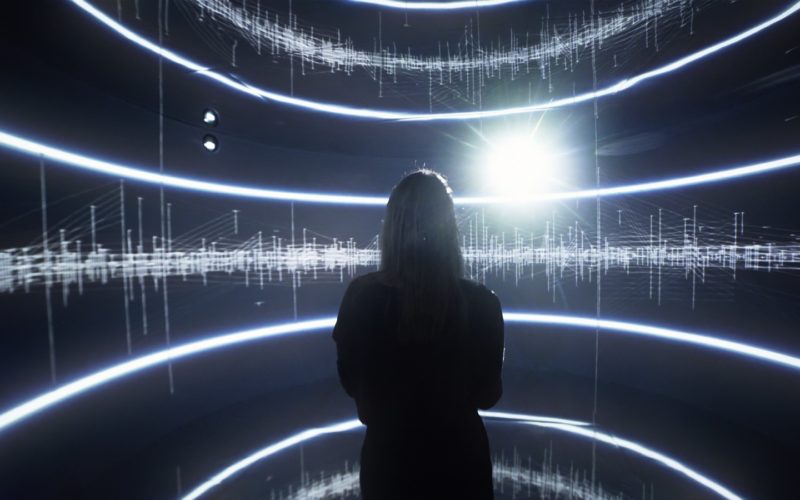

“The Animal is Absent” a Choreographed Light Show

Mar 31, 2016

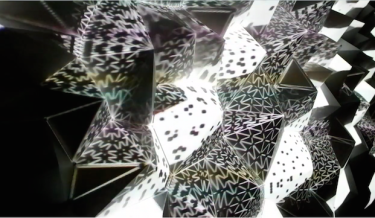

103 Paper Pyramids Come Alive When Origami Meets 3D Light Art

Oct 12, 2015

DUST IS A VIRTUAL REALITY PIECE

May 19, 2017
