Related posts

An Incredible A/V Light Show Brings the Walt Disney Concert Hall to Life

Mar 01, 2017

Rino Stefano Tagliafierro: Optogram “Bloody Faces”

Mar 18, 2016

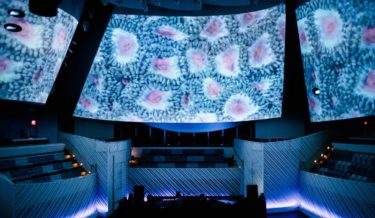

A Magical Coral Orgy Took Over Miami’s New World Center

Apr 07, 2017